# xcube_sh imports

from xcube_sh.cube import open_cube

from xcube_sh.config import CubeConfig

from xcube_sh.sentinelhub import SentinelHub

Sentinel-1

Issues:

How can the S1GRD data be queried by track number?

The bands ‘localIncidenceAngle’ and ‘shadowMask’ return an error (HTTP 400, Bad Request) as soon as their data is fetched.

How can the value of ‘orthorectify’ in the Processing Options (https://docs.sentinel-hub.com/api/latest/data/sentinel-1-grd/#processing-options) be set?
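For reference, in a raw Sentinel Hub Process API request the processing options sit in a `processing` object next to `dataFilter`. The sketch below only builds such a request body and does not send it. It bypasses xcube_sh entirely; whether `CubeConfig` can forward these options is not established here. The helper name `build_s1_request`, the evalscript, and the choice of `GAMMA0_TERRAIN` are illustrative assumptions. The linked documentation appears to tie these two bands to orthorectification (and terrain correction for the shadow mask), which may be related to the HTTP 400 seen further down.

```python
import json

# Evalscript (Sentinel Hub "VERSION=3" syntax) returning the two bands in question.
EVALSCRIPT = """
//VERSION=3
function setup() {
  return {
    input: ["localIncidenceAngle", "shadowMask"],
    output: { bands: 2, sampleType: "FLOAT32" }
  };
}
function evaluatePixel(sample) {
  return [sample.localIncidenceAngle, sample.shadowMask];
}
"""


def build_s1_request(bbox, time_from, time_to, width, height):
    """Build a Process API request body (hypothetical helper, nothing is sent)."""
    return {
        "input": {
            "bounds": {
                "bbox": list(bbox),
                "properties": {"crs": "http://www.opengis.net/def/crs/OGC/1.3/CRS84"},
            },
            "data": [
                {
                    "type": "sentinel-1-grd",
                    "dataFilter": {"timeRange": {"from": time_from, "to": time_to}},
                    # Processing options as described in the linked documentation.
                    "processing": {
                        "orthorectify": True,
                        "backCoeff": "GAMMA0_TERRAIN",  # assumption: terrain correction wanted
                    },
                }
            ],
        },
        "output": {
            "width": width,
            "height": height,
            "responses": [{"identifier": "default", "format": {"type": "image/tiff"}}],
        },
        "evalscript": EVALSCRIPT,
    }


payload = build_s1_request(
    [11.02, 46.65, 11.36, 46.95],
    "2018-05-10T00:00:00Z",
    "2018-05-12T00:00:00Z",
    width=189,
    height=167,
)
print(json.dumps(payload["input"]["data"][0]["processing"], indent=2))
```

Sending the body would be an authenticated POST to the `/api/v1/process` endpoint that appears in the tracebacks below; the authentication step is left out of this sketch.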

SentinelHub().band_names('S1GRD')

['VV', 'HH', 'VH', 'localIncidenceAngle', 'scatteringArea', 'shadowMask', 'HV']

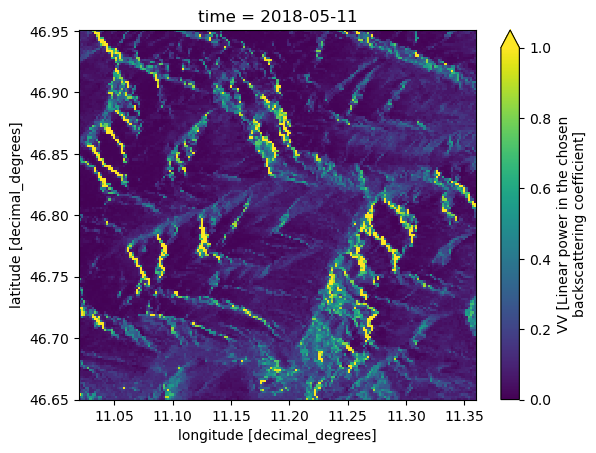

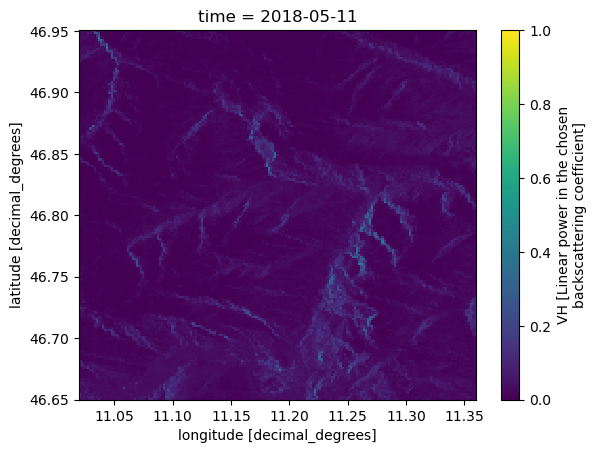

cube_config_s1 = CubeConfig(

dataset_name='S1GRD',

band_names=['VV', 'VH', 'localIncidenceAngle', 'shadowMask'],

bbox=[11.02, 46.65, 11.36, 46.95],

spatial_res=0.0018, # in degrees; roughly 200 m in latitude

time_range=['2018-02-01', '2018-06-30'],

time_period='6D'

)
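`spatial_res` is given in degrees, so the ground size of a pixel depends on latitude. A rough conversion can be sketched as follows, assuming a spherical Earth with about 111.32 km per degree of latitude; the function name and the constant are illustrative and not part of xcube_sh.

```python
import math

# Approximate length of one degree of latitude in metres (spherical assumption).
METRES_PER_DEGREE = 111_320.0


def degrees_to_metres(res_deg, lat_deg):
    """Return the (north-south, east-west) pixel size in metres at a latitude."""
    north_south = res_deg * METRES_PER_DEGREE
    # Meridians converge towards the poles, so east-west distances shrink with cos(lat).
    east_west = north_south * math.cos(math.radians(lat_deg))
    return north_south, east_west


# Centre latitude of the bounding box used in this notebook.
ns, ew = degrees_to_metres(0.0018, 46.8)
print(f"{ns:.0f} m north-south, {ew:.0f} m east-west")
```

At this latitude a pixel of 0.0018 degrees is about 200 m tall and noticeably narrower in the east-west direction.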

cube_s1 = open_cube(cube_config_s1)

cube_s1

<xarray.Dataset>

Dimensions: (time: 25, lat: 167, lon: 189, bnds: 2)

Coordinates:

* lat (lat) float64 46.95 46.95 46.95 ... 46.65 46.65 46.65

* lon (lon) float64 11.02 11.02 11.02 ... 11.36 11.36 11.36

* time (time) datetime64[ns] 2018-02-04 ... 2018-06-28

time_bnds (time, bnds) datetime64[ns] dask.array<chunksize=(25, 2), meta=np.ndarray>

Dimensions without coordinates: bnds

Data variables:

VH (time, lat, lon) float32 dask.array<chunksize=(1, 167, 189), meta=np.ndarray>

VV (time, lat, lon) float32 dask.array<chunksize=(1, 167, 189), meta=np.ndarray>

localIncidenceAngle (time, lat, lon) float32 dask.array<chunksize=(1, 167, 189), meta=np.ndarray>

shadowMask (time, lat, lon) float32 dask.array<chunksize=(1, 167, 189), meta=np.ndarray>

Attributes: (12/13)

Conventions: CF-1.7

title: S1GRD Data Cube Subset

history: [{'program': 'xcube_sh.chunkstore.SentinelHubC...

date_created: 2023-03-07T06:54:16.049322

time_coverage_start: 2018-02-01T00:00:00+00:00

time_coverage_end: 2018-07-01T00:00:00+00:00

... ...

time_coverage_resolution: P6DT0H0M0S

geospatial_lon_min: 11.02

geospatial_lat_min: 46.65

geospatial_lon_max: 11.360199999999999

geospatial_lat_max: 46.9506

processing_level: L1B

cube_s1.VV.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

<matplotlib.image.AxesImage at 0x7fcfae7c2970>

cube_s1.VH.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

<matplotlib.image.AxesImage at 0x7fcfac8551f0>
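`sel(time='2018-05-10', method='nearest')` picks the time label closest to the requested date. Assuming the labels are the centres of consecutive 6-day bins starting at 2018-02-01 (this matches the first and last labels in the Dataset repr above, but is not taken from the xcube_sh source), the selected step can be worked out with the standard library alone:

```python
from datetime import datetime, timedelta


def bin_centres(start, end_exclusive, period_days):
    """Centres of consecutive bins of `period_days` days in [start, end_exclusive)."""
    period = timedelta(days=period_days)
    n_bins = (end_exclusive - start) // period
    return [start + period / 2 + k * period for k in range(n_bins)]


def nearest_index(labels, target):
    """Index of the label with the smallest absolute distance to `target`."""
    return min(range(len(labels)), key=lambda i: abs(labels[i] - target))


centres = bin_centres(datetime(2018, 2, 1), datetime(2018, 7, 1), 6)
idx = nearest_index(centres, datetime(2018, 5, 10))
print(len(centres), centres[0].date(), centres[-1].date())
print(idx, centres[idx].date())
```

Under these assumptions the request resolves to time index 16, which is consistent with the chunk keys ending in `/16.0.0` in the tracebacks below.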

cube_s1.localIncidenceAngle.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

Failed to fetch data from Sentinel Hub after 44.90783905982971 seconds and 200 retries

HTTP status code was 400

---------------------------------------------------------------------------

KeyError Traceback (most recent call last)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:2434, in LRUStoreCache.__getitem__(self, key)

2433 with self._mutex:

-> 2434 value = self._values_cache[key]

2435 # cache hit if no KeyError is raised

KeyError: 'localIncidenceAngle/16.0.0'

During handling of the above exception, another exception occurred:

HTTPError Traceback (most recent call last)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:645, in SentinelHubError.maybe_raise_for_response(cls, response)

644 try:

--> 645 response.raise_for_status()

646 except requests.HTTPError as e:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/requests/models.py:1021, in Response.raise_for_status(self)

1020 if http_error_msg:

-> 1021 raise HTTPError(http_error_msg, response=self)

HTTPError: 400 Client Error: Bad Request for url: https://services.sentinel-hub.com/api/v1/process

The above exception was the direct cause of the following exception:

SentinelHubError Traceback (most recent call last)

Cell In [7], line 1

----> 1 cube_s1.localIncidenceAngle.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/plot/plot.py:1311, in _plot2d.<locals>.plotmethod(_PlotMethods_obj, x, y, figsize, size, aspect, ax, row, col, col_wrap, xincrease, yincrease, add_colorbar, add_labels, vmin, vmax, cmap, colors, center, robust, extend, levels, infer_intervals, subplot_kws, cbar_ax, cbar_kwargs, xscale, yscale, xticks, yticks, xlim, ylim, norm, **kwargs)

1309 for arg in ["_PlotMethods_obj", "newplotfunc", "kwargs"]:

1310 del allargs[arg]

-> 1311 return newplotfunc(**allargs)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/plot/plot.py:1175, in _plot2d.<locals>.newplotfunc(darray, x, y, figsize, size, aspect, ax, row, col, col_wrap, xincrease, yincrease, add_colorbar, add_labels, vmin, vmax, cmap, center, robust, extend, levels, infer_intervals, colors, subplot_kws, cbar_ax, cbar_kwargs, xscale, yscale, xticks, yticks, xlim, ylim, norm, **kwargs)

1172 yval = yval.to_numpy()

1174 # Pass the data as a masked ndarray too

-> 1175 zval = darray.to_masked_array(copy=False)

1177 # Replace pd.Intervals if contained in xval or yval.

1178 xplt, xlab_extra = _resolve_intervals_2dplot(xval, plotfunc.__name__)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/dataarray.py:3574, in DataArray.to_masked_array(self, copy)

3560 def to_masked_array(self, copy: bool = True) -> np.ma.MaskedArray:

3561 """Convert this array into a numpy.ma.MaskedArray

3562

3563 Parameters

(...)

3572 Masked where invalid values (nan or inf) occur.

3573 """

-> 3574 values = self.to_numpy() # only compute lazy arrays once

3575 isnull = pd.isnull(values)

3576 return np.ma.MaskedArray(data=values, mask=isnull, copy=copy)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/dataarray.py:740, in DataArray.to_numpy(self)

729 def to_numpy(self) -> np.ndarray:

730 """

731 Coerces wrapped data to numpy and returns a numpy.ndarray.

732

(...)

738 DataArray.data

739 """

--> 740 return self.variable.to_numpy()

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/variable.py:1178, in Variable.to_numpy(self)

1176 # TODO first attempt to call .to_numpy() once some libraries implement it

1177 if hasattr(data, "chunks"):

-> 1178 data = data.compute()

1179 if isinstance(data, cupy_array_type):

1180 data = data.get()

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/base.py:315, in DaskMethodsMixin.compute(self, **kwargs)

291 def compute(self, **kwargs):

292 """Compute this dask collection

293

294 This turns a lazy Dask collection into its in-memory equivalent.

(...)

313 dask.base.compute

314 """

--> 315 (result,) = compute(self, traverse=False, **kwargs)

316 return result

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/base.py:600, in compute(traverse, optimize_graph, scheduler, get, *args, **kwargs)

597 keys.append(x.__dask_keys__())

598 postcomputes.append(x.__dask_postcompute__())

--> 600 results = schedule(dsk, keys, **kwargs)

601 return repack([f(r, *a) for r, (f, a) in zip(results, postcomputes)])

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/threaded.py:89, in get(dsk, keys, cache, num_workers, pool, **kwargs)

86 elif isinstance(pool, multiprocessing.pool.Pool):

87 pool = MultiprocessingPoolExecutor(pool)

---> 89 results = get_async(

90 pool.submit,

91 pool._max_workers,

92 dsk,

93 keys,

94 cache=cache,

95 get_id=_thread_get_id,

96 pack_exception=pack_exception,

97 **kwargs,

98 )

100 # Cleanup pools associated to dead threads

101 with pools_lock:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:511, in get_async(submit, num_workers, dsk, result, cache, get_id, rerun_exceptions_locally, pack_exception, raise_exception, callbacks, dumps, loads, chunksize, **kwargs)

509 _execute_task(task, data) # Re-execute locally

510 else:

--> 511 raise_exception(exc, tb)

512 res, worker_id = loads(res_info)

513 state["cache"][key] = res

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:319, in reraise(exc, tb)

317 if exc.__traceback__ is not tb:

318 raise exc.with_traceback(tb)

--> 319 raise exc

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:224, in execute_task(key, task_info, dumps, loads, get_id, pack_exception)

222 try:

223 task, data = loads(task_info)

--> 224 result = _execute_task(task, data)

225 id = get_id()

226 result = dumps((result, id))

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/core.py:119, in _execute_task(arg, cache, dsk)

115 func, args = arg[0], arg[1:]

116 # Note: Don't assign the subtask results to a variable. numpy detects

117 # temporaries by their reference count and can execute certain

118 # operations in-place.

--> 119 return func(*(_execute_task(a, cache) for a in args))

120 elif not ishashable(arg):

121 return arg

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/array/core.py:127, in getter(a, b, asarray, lock)

122 # Below we special-case `np.matrix` to force a conversion to

123 # `np.ndarray` and preserve original Dask behavior for `getter`,

124 # as for all purposes `np.matrix` is array-like and thus

125 # `is_arraylike` evaluates to `True` in that case.

126 if asarray and (not is_arraylike(c) or isinstance(c, np.matrix)):

--> 127 c = np.asarray(c)

128 finally:

129 if lock:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:459, in ImplicitToExplicitIndexingAdapter.__array__(self, dtype)

458 def __array__(self, dtype=None):

--> 459 return np.asarray(self.array, dtype=dtype)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:623, in CopyOnWriteArray.__array__(self, dtype)

622 def __array__(self, dtype=None):

--> 623 return np.asarray(self.array, dtype=dtype)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:524, in LazilyIndexedArray.__array__(self, dtype)

522 def __array__(self, dtype=None):

523 array = as_indexable(self.array)

--> 524 return np.asarray(array[self.key], dtype=None)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/backends/zarr.py:76, in ZarrArrayWrapper.__getitem__(self, key)

74 array = self.get_array()

75 if isinstance(key, indexing.BasicIndexer):

---> 76 return array[key.tuple]

77 elif isinstance(key, indexing.VectorizedIndexer):

78 return array.vindex[

79 indexing._arrayize_vectorized_indexer(key, self.shape).tuple

80 ]

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:807, in Array.__getitem__(self, selection)

805 result = self.vindex[selection]

806 else:

--> 807 result = self.get_basic_selection(pure_selection, fields=fields)

808 return result

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:933, in Array.get_basic_selection(self, selection, out, fields)

930 return self._get_basic_selection_zd(selection=selection, out=out,

931 fields=fields)

932 else:

--> 933 return self._get_basic_selection_nd(selection=selection, out=out,

934 fields=fields)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:976, in Array._get_basic_selection_nd(self, selection, out, fields)

970 def _get_basic_selection_nd(self, selection, out=None, fields=None):

971 # implementation of basic selection for array with at least one dimension

972

973 # setup indexer

974 indexer = BasicIndexer(selection, self)

--> 976 return self._get_selection(indexer=indexer, out=out, fields=fields)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:1267, in Array._get_selection(self, indexer, out, fields)

1261 if not hasattr(self.chunk_store, "getitems") or \

1262 any(map(lambda x: x == 0, self.shape)):

1263 # sequentially get one key at a time from storage

1264 for chunk_coords, chunk_selection, out_selection in indexer:

1265

1266 # load chunk selection into output array

-> 1267 self._chunk_getitem(chunk_coords, chunk_selection, out, out_selection,

1268 drop_axes=indexer.drop_axes, fields=fields)

1269 else:

1270 # allow storage to get multiple items at once

1271 lchunk_coords, lchunk_selection, lout_selection = zip(*indexer)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:1966, in Array._chunk_getitem(self, chunk_coords, chunk_selection, out, out_selection, drop_axes, fields)

1962 ckey = self._chunk_key(chunk_coords)

1964 try:

1965 # obtain compressed data for chunk

-> 1966 cdata = self.chunk_store[ckey]

1968 except KeyError:

1969 # chunk not initialized

1970 if self._fill_value is not None:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:2442, in LRUStoreCache.__getitem__(self, key)

2438 self._values_cache.move_to_end(key)

2440 except KeyError:

2441 # cache miss, retrieve value from the store

-> 2442 value = self._store[key]

2443 with self._mutex:

2444 self.misses += 1

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:724, in KVStore.__getitem__(self, key)

723 def __getitem__(self, key):

--> 724 return self._mutable_mapping[key]

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:508, in RemoteStore.__getitem__(self, key)

506 value = self._vfs[key]

507 if isinstance(value, tuple):

--> 508 return self._fetch_chunk(key, *value)

509 return value

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:419, in RemoteStore._fetch_chunk(self, key, band_name, chunk_index)

411 observer(band_name=band_name,

412 chunk_index=chunk_index,

413 bbox=request_bbox,

414 time_range=request_time_range,

415 duration=duration,

416 exception=exception)

418 if exception:

--> 419 raise exception

421 return chunk_data

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:400, in RemoteStore._fetch_chunk(self, key, band_name, chunk_index)

398 try:

399 exception = None

--> 400 chunk_data = self.fetch_chunk(key,

401 band_name,

402 chunk_index,

403 bbox=request_bbox,

404 time_range=request_time_range)

405 except Exception as e:

406 exception = e

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:729, in SentinelHubChunkStore.fetch_chunk(self, key, band_name, chunk_index, bbox, time_range)

712 band_sample_types = band_sample_types[index]

714 request = SentinelHub.new_data_request(

715 self.cube_config.dataset_name,

716 band_names,

(...)

726 band_units=self.cube_config.band_units

727 )

--> 729 response = self._sentinel_hub.get_data(

730 request,

731 mime_type='application/octet-stream'

732 )

734 if response is None or not response.ok:

735 message = (f'{key}: cannot fetch chunk for variable'

736 f' {band_name!r}, bbox {bbox!r}, and'

737 f' time_range {time_range!r}')

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:432, in SentinelHub.get_data(self, request, mime_type)

430 raise response_error

431 elif response is not None:

--> 432 SentinelHubError.maybe_raise_for_response(response)

433 elif self.error_policy == 'warn' and self.enable_warnings:

434 if response_error:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:655, in SentinelHubError.maybe_raise_for_response(cls, response)

653 except Exception:

654 pass

--> 655 raise SentinelHubError(f'{e}: {detail}' if detail else f'{e}',

656 response=response) from e

SentinelHubError: 400 Client Error: Bad Request for url: https://services.sentinel-hub.com/api/v1/process

cube_s1.shadowMask.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

Failed to fetch data from Sentinel Hub after 39.48806810379028 seconds and 200 retries

HTTP status code was 400

---------------------------------------------------------------------------

KeyError Traceback (most recent call last)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:2434, in LRUStoreCache.__getitem__(self, key)

2433 with self._mutex:

-> 2434 value = self._values_cache[key]

2435 # cache hit if no KeyError is raised

KeyError: 'shadowMask/16.0.0'

During handling of the above exception, another exception occurred:

HTTPError Traceback (most recent call last)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:645, in SentinelHubError.maybe_raise_for_response(cls, response)

644 try:

--> 645 response.raise_for_status()

646 except requests.HTTPError as e:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/requests/models.py:1021, in Response.raise_for_status(self)

1020 if http_error_msg:

-> 1021 raise HTTPError(http_error_msg, response=self)

HTTPError: 400 Client Error: Bad Request for url: https://services.sentinel-hub.com/api/v1/process

The above exception was the direct cause of the following exception:

SentinelHubError Traceback (most recent call last)

Cell In [8], line 1

----> 1 cube_s1.shadowMask.sel(time='2018-05-10', method='nearest').plot.imshow(vmin=0, vmax=1)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/plot/plot.py:1311, in _plot2d.<locals>.plotmethod(_PlotMethods_obj, x, y, figsize, size, aspect, ax, row, col, col_wrap, xincrease, yincrease, add_colorbar, add_labels, vmin, vmax, cmap, colors, center, robust, extend, levels, infer_intervals, subplot_kws, cbar_ax, cbar_kwargs, xscale, yscale, xticks, yticks, xlim, ylim, norm, **kwargs)

1309 for arg in ["_PlotMethods_obj", "newplotfunc", "kwargs"]:

1310 del allargs[arg]

-> 1311 return newplotfunc(**allargs)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/plot/plot.py:1175, in _plot2d.<locals>.newplotfunc(darray, x, y, figsize, size, aspect, ax, row, col, col_wrap, xincrease, yincrease, add_colorbar, add_labels, vmin, vmax, cmap, center, robust, extend, levels, infer_intervals, colors, subplot_kws, cbar_ax, cbar_kwargs, xscale, yscale, xticks, yticks, xlim, ylim, norm, **kwargs)

1172 yval = yval.to_numpy()

1174 # Pass the data as a masked ndarray too

-> 1175 zval = darray.to_masked_array(copy=False)

1177 # Replace pd.Intervals if contained in xval or yval.

1178 xplt, xlab_extra = _resolve_intervals_2dplot(xval, plotfunc.__name__)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/dataarray.py:3574, in DataArray.to_masked_array(self, copy)

3560 def to_masked_array(self, copy: bool = True) -> np.ma.MaskedArray:

3561 """Convert this array into a numpy.ma.MaskedArray

3562

3563 Parameters

(...)

3572 Masked where invalid values (nan or inf) occur.

3573 """

-> 3574 values = self.to_numpy() # only compute lazy arrays once

3575 isnull = pd.isnull(values)

3576 return np.ma.MaskedArray(data=values, mask=isnull, copy=copy)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/dataarray.py:740, in DataArray.to_numpy(self)

729 def to_numpy(self) -> np.ndarray:

730 """

731 Coerces wrapped data to numpy and returns a numpy.ndarray.

732

(...)

738 DataArray.data

739 """

--> 740 return self.variable.to_numpy()

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/variable.py:1178, in Variable.to_numpy(self)

1176 # TODO first attempt to call .to_numpy() once some libraries implement it

1177 if hasattr(data, "chunks"):

-> 1178 data = data.compute()

1179 if isinstance(data, cupy_array_type):

1180 data = data.get()

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/base.py:315, in DaskMethodsMixin.compute(self, **kwargs)

291 def compute(self, **kwargs):

292 """Compute this dask collection

293

294 This turns a lazy Dask collection into its in-memory equivalent.

(...)

313 dask.base.compute

314 """

--> 315 (result,) = compute(self, traverse=False, **kwargs)

316 return result

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/base.py:600, in compute(traverse, optimize_graph, scheduler, get, *args, **kwargs)

597 keys.append(x.__dask_keys__())

598 postcomputes.append(x.__dask_postcompute__())

--> 600 results = schedule(dsk, keys, **kwargs)

601 return repack([f(r, *a) for r, (f, a) in zip(results, postcomputes)])

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/threaded.py:89, in get(dsk, keys, cache, num_workers, pool, **kwargs)

86 elif isinstance(pool, multiprocessing.pool.Pool):

87 pool = MultiprocessingPoolExecutor(pool)

---> 89 results = get_async(

90 pool.submit,

91 pool._max_workers,

92 dsk,

93 keys,

94 cache=cache,

95 get_id=_thread_get_id,

96 pack_exception=pack_exception,

97 **kwargs,

98 )

100 # Cleanup pools associated to dead threads

101 with pools_lock:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:511, in get_async(submit, num_workers, dsk, result, cache, get_id, rerun_exceptions_locally, pack_exception, raise_exception, callbacks, dumps, loads, chunksize, **kwargs)

509 _execute_task(task, data) # Re-execute locally

510 else:

--> 511 raise_exception(exc, tb)

512 res, worker_id = loads(res_info)

513 state["cache"][key] = res

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:319, in reraise(exc, tb)

317 if exc.__traceback__ is not tb:

318 raise exc.with_traceback(tb)

--> 319 raise exc

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/local.py:224, in execute_task(key, task_info, dumps, loads, get_id, pack_exception)

222 try:

223 task, data = loads(task_info)

--> 224 result = _execute_task(task, data)

225 id = get_id()

226 result = dumps((result, id))

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/core.py:119, in _execute_task(arg, cache, dsk)

115 func, args = arg[0], arg[1:]

116 # Note: Don't assign the subtask results to a variable. numpy detects

117 # temporaries by their reference count and can execute certain

118 # operations in-place.

--> 119 return func(*(_execute_task(a, cache) for a in args))

120 elif not ishashable(arg):

121 return arg

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/dask/array/core.py:127, in getter(a, b, asarray, lock)

122 # Below we special-case `np.matrix` to force a conversion to

123 # `np.ndarray` and preserve original Dask behavior for `getter`,

124 # as for all purposes `np.matrix` is array-like and thus

125 # `is_arraylike` evaluates to `True` in that case.

126 if asarray and (not is_arraylike(c) or isinstance(c, np.matrix)):

--> 127 c = np.asarray(c)

128 finally:

129 if lock:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:459, in ImplicitToExplicitIndexingAdapter.__array__(self, dtype)

458 def __array__(self, dtype=None):

--> 459 return np.asarray(self.array, dtype=dtype)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:623, in CopyOnWriteArray.__array__(self, dtype)

622 def __array__(self, dtype=None):

--> 623 return np.asarray(self.array, dtype=dtype)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/core/indexing.py:524, in LazilyIndexedArray.__array__(self, dtype)

522 def __array__(self, dtype=None):

523 array = as_indexable(self.array)

--> 524 return np.asarray(array[self.key], dtype=None)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xarray/backends/zarr.py:76, in ZarrArrayWrapper.__getitem__(self, key)

74 array = self.get_array()

75 if isinstance(key, indexing.BasicIndexer):

---> 76 return array[key.tuple]

77 elif isinstance(key, indexing.VectorizedIndexer):

78 return array.vindex[

79 indexing._arrayize_vectorized_indexer(key, self.shape).tuple

80 ]

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:807, in Array.__getitem__(self, selection)

805 result = self.vindex[selection]

806 else:

--> 807 result = self.get_basic_selection(pure_selection, fields=fields)

808 return result

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:933, in Array.get_basic_selection(self, selection, out, fields)

930 return self._get_basic_selection_zd(selection=selection, out=out,

931 fields=fields)

932 else:

--> 933 return self._get_basic_selection_nd(selection=selection, out=out,

934 fields=fields)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:976, in Array._get_basic_selection_nd(self, selection, out, fields)

970 def _get_basic_selection_nd(self, selection, out=None, fields=None):

971 # implementation of basic selection for array with at least one dimension

972

973 # setup indexer

974 indexer = BasicIndexer(selection, self)

--> 976 return self._get_selection(indexer=indexer, out=out, fields=fields)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:1267, in Array._get_selection(self, indexer, out, fields)

1261 if not hasattr(self.chunk_store, "getitems") or \

1262 any(map(lambda x: x == 0, self.shape)):

1263 # sequentially get one key at a time from storage

1264 for chunk_coords, chunk_selection, out_selection in indexer:

1265

1266 # load chunk selection into output array

-> 1267 self._chunk_getitem(chunk_coords, chunk_selection, out, out_selection,

1268 drop_axes=indexer.drop_axes, fields=fields)

1269 else:

1270 # allow storage to get multiple items at once

1271 lchunk_coords, lchunk_selection, lout_selection = zip(*indexer)

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/core.py:1966, in Array._chunk_getitem(self, chunk_coords, chunk_selection, out, out_selection, drop_axes, fields)

1962 ckey = self._chunk_key(chunk_coords)

1964 try:

1965 # obtain compressed data for chunk

-> 1966 cdata = self.chunk_store[ckey]

1968 except KeyError:

1969 # chunk not initialized

1970 if self._fill_value is not None:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:2442, in LRUStoreCache.__getitem__(self, key)

2438 self._values_cache.move_to_end(key)

2440 except KeyError:

2441 # cache miss, retrieve value from the store

-> 2442 value = self._store[key]

2443 with self._mutex:

2444 self.misses += 1

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/zarr/storage.py:724, in KVStore.__getitem__(self, key)

723 def __getitem__(self, key):

--> 724 return self._mutable_mapping[key]

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:508, in RemoteStore.__getitem__(self, key)

506 value = self._vfs[key]

507 if isinstance(value, tuple):

--> 508 return self._fetch_chunk(key, *value)

509 return value

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:419, in RemoteStore._fetch_chunk(self, key, band_name, chunk_index)

411 observer(band_name=band_name,

412 chunk_index=chunk_index,

413 bbox=request_bbox,

414 time_range=request_time_range,

415 duration=duration,

416 exception=exception)

418 if exception:

--> 419 raise exception

421 return chunk_data

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:400, in RemoteStore._fetch_chunk(self, key, band_name, chunk_index)

398 try:

399 exception = None

--> 400 chunk_data = self.fetch_chunk(key,

401 band_name,

402 chunk_index,

403 bbox=request_bbox,

404 time_range=request_time_range)

405 except Exception as e:

406 exception = e

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/chunkstore.py:729, in SentinelHubChunkStore.fetch_chunk(self, key, band_name, chunk_index, bbox, time_range)

712 band_sample_types = band_sample_types[index]

714 request = SentinelHub.new_data_request(

715 self.cube_config.dataset_name,

716 band_names,

(...)

726 band_units=self.cube_config.band_units

727 )

--> 729 response = self._sentinel_hub.get_data(

730 request,

731 mime_type='application/octet-stream'

732 )

734 if response is None or not response.ok:

735 message = (f'{key}: cannot fetch chunk for variable'

736 f' {band_name!r}, bbox {bbox!r}, and'

737 f' time_range {time_range!r}')

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:432, in SentinelHub.get_data(self, request, mime_type)

430 raise response_error

431 elif response is not None:

--> 432 SentinelHubError.maybe_raise_for_response(response)

433 elif self.error_policy == 'warn' and self.enable_warnings:

434 if response_error:

File /opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/sentinelhub.py:655, in SentinelHubError.maybe_raise_for_response(cls, response)

653 except Exception:

654 pass

--> 655 raise SentinelHubError(f'{e}: {detail}' if detail else f'{e}',

656 response=response) from e

SentinelHubError: 400 Client Error: Bad Request for url: https://services.sentinel-hub.com/api/v1/process
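Both failures only surface when a chunk is actually fetched, and each attempt takes around 40 seconds because of the retries. A small probe that loads one time step per band and records the outcome makes it easier to see which variables are usable. This is a generic sketch: `probe_bands` is a hypothetical helper, and the fake loader below only imitates the behaviour observed above.

```python
def probe_bands(load_band, band_names):
    """Try to load each band once; map name -> None (ok) or an error summary."""
    results = {}
    for name in band_names:
        try:
            load_band(name)
            results[name] = None
        except Exception as exc:  # broad on purpose: any failure is worth reporting
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


def fake_loader(name):
    """Stand-in for a real fetch; fails for the two bands that failed above."""
    if name in ("localIncidenceAngle", "shadowMask"):
        raise RuntimeError("400 Client Error: Bad Request")
    return [[0.0]]


report = probe_bands(fake_loader, ["VV", "VH", "localIncidenceAngle", "shadowMask"])
for band, error in report.items():
    print(f"{band}: {'ok' if error is None else error}")
```

With the real cube, the loader could be something like `lambda name: cube_s1[name].isel(time=0).values`, which forces the first time step of that variable to be fetched.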

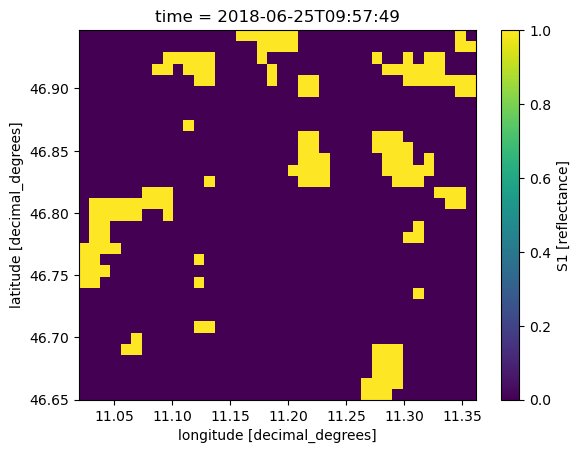

Sentinel-3 SLSTR

Issues:

The S1 band contains only the values 0 and 1.

SentinelHub().band_names('S3SLSTR')

['S1',

'S2',

'S3',

'S4',

'S4_A',

'S4_B',

'S5',

'S5_A',

'S5_B',

'S6',

'S6_A',

'S6_B',

'S7',

'S8',

'S9',

'F1',

'F2',

'CLOUD_FRACTION',

'SEA_ICE_FRACTION',

'SEA_SURFACE_TEMPERATURE',

'DEW_POINT',

'SKIN_TEMPERATURE',

'SNOW_ALBEDO',

'SNOW_DEPTH',

'SOIL_WETNESS',

'TEMPERATURE',

'TOTAL_COLUMN_OZONE',

'TOTAL_COLUMN_WATER_VAPOR']

cube_config_s3 = CubeConfig(

dataset_name='S3SLSTR',

band_names=['S1'],

bbox=[11.02, 46.65, 11.36, 46.95],

spatial_res=0.009, # in degrees; roughly 1000 m in latitude

upsampling='BILINEAR',

downsampling='BILINEAR',

time_range=['2018-02-01', '2018-06-30']

)

/opt/conda/envs/edc-default-2022.10-14/lib/python3.9/site-packages/xcube_sh/config.py:248: FutureWarning: Units 'M', 'Y' and 'y' do not represent unambiguous timedelta values and will be removed in a future version.

time_tolerance = pd.to_timedelta(time_tolerance)
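The grid size that results from a bounding box and a resolution can be checked by hand. The sketch below assumes the number of pixels is the rounded ratio of extent to resolution; that assumption reproduces the dimensions printed for both cubes in this notebook, but it is a consistency check, not a description of how xcube_sh computes them.

```python
def grid_size(bbox, spatial_res):
    """Return (width, height) in pixels for bbox = [lon_min, lat_min, lon_max, lat_max]."""
    lon_min, lat_min, lon_max, lat_max = bbox
    width = round((lon_max - lon_min) / spatial_res)
    height = round((lat_max - lat_min) / spatial_res)
    return width, height


bbox = [11.02, 46.65, 11.36, 46.95]
s1_width, s1_height = grid_size(bbox, 0.0018)  # Sentinel-1 configuration
s3_width, s3_height = grid_size(bbox, 0.009)   # Sentinel-3 SLSTR configuration
print("S1GRD:", s1_width, "x", s1_height)
print("S3SLSTR:", s3_width, "x", s3_height)

# The effective upper bounds follow from the pixel counts.
print("S3 lat max:", round(bbox[1] + s3_height * 0.009, 4))
print("S3 lon max:", round(bbox[0] + s3_width * 0.009, 4))
```

Because the pixel count is an integer, the effective extent differs slightly from the requested one, which is why the `geospatial_*_max` attributes do not exactly equal the bounding box.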

cube_s3 = open_cube(cube_config_s3, api_url="https://creodias.sentinel-hub.com")

cube_s3

<xarray.Dataset>

Dimensions: (time: 230, lat: 33, lon: 38, bnds: 2)

Coordinates:

* lat (lat) float64 46.94 46.93 46.92 46.92 ... 46.68 46.67 46.66 46.65

* lon (lon) float64 11.02 11.03 11.04 11.05 ... 11.33 11.34 11.35 11.36

* time (time) datetime64[ns] 2018-02-01T20:13:30 ... 2018-06-29T21:16:59

time_bnds (time, bnds) datetime64[ns] dask.array<chunksize=(230, 2), meta=np.ndarray>

Dimensions without coordinates: bnds

Data variables:

S1 (time, lat, lon) float32 dask.array<chunksize=(1, 33, 38), meta=np.ndarray>

Attributes:

Conventions: CF-1.7

title: S3SLSTR Data Cube Subset

history: [{'program': 'xcube_sh.chunkstore.SentinelHubChu...

date_created: 2023-03-07T06:52:28.574972

time_coverage_start: 2018-02-01T20:13:30.178000+00:00

time_coverage_end: 2018-06-29T21:17:14.559000+00:00

time_coverage_duration: P148DT1H3M44.381S

geospatial_lon_min: 11.02

geospatial_lat_min: 46.65

geospatial_lon_max: 11.362

geospatial_lat_max: 46.946999999999996

processing_level: L1B

cube_s3.S1.sel(time='2018-06-25', method='nearest').plot.imshow()

<matplotlib.image.AxesImage at 0x7f3be5b837c0>
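To back up the observation that S1 holds only 0 and 1, the distinct values of a time slice can be counted. The helper below is a minimal sketch using only the standard library; `value_counts` is a hypothetical name, and the sample list stands in for real pixel values.

```python
import math
from collections import Counter


def value_counts(values):
    """Count distinct finite values and, separately, the number of NaNs."""
    counts = Counter()
    nan_count = 0
    for value in values:
        value = float(value)
        if math.isnan(value):
            nan_count += 1
        else:
            counts[value] += 1
    return dict(counts), nan_count


# Stand-in data; with the real cube the input could be
# cube_s3.S1.sel(time='2018-06-25', method='nearest').values.ravel()
sample = [0.0, 1.0, 1.0, float("nan"), 0.0, 1.0]
counts, nan_count = value_counts(sample)
print(counts, "NaN:", nan_count)
```

If the real slice also yields only the keys 0.0 and 1.0, the values are being delivered as a binary result rather than as continuous measurements.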