cd $HOME

git clone https://github.uio.no/annefou/GEF4530.git

cd $HOME/GEF4530/setup

./gef4530_notur.bash

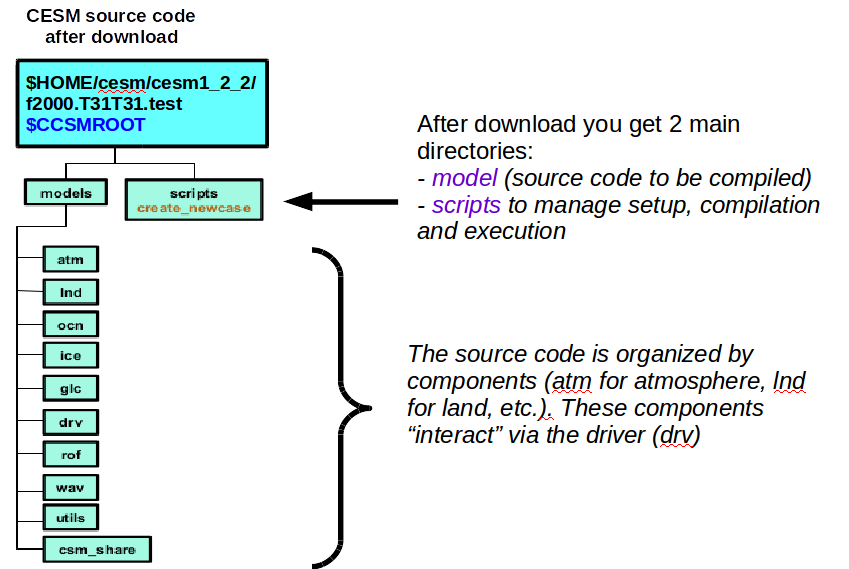

The script above copies the source code into $HOME/cesm/cesm1_2_2 and creates, in /work/users/$USER/inputdata, symbolic links to the input data required by our model configuration.

Input data can be large, which is why we create symbolic links instead of making one copy per user. The main copy is located in /work/users/annefou/public/inputdata.
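As a minimal sketch of why a symbolic link avoids duplicating data, the snippet below links a shared directory into a user directory. The directories and the sample.nc file are hypothetical scratch locations created with mktemp, not the real course paths.

```shell
# Hypothetical scratch layout standing in for the shared and per-user areas.
demo=$(mktemp -d)
mkdir -p "$demo/public/inputdata" "$demo/user"
echo "forcing data" > "$demo/public/inputdata/sample.nc"

# Link the shared directory into the user area instead of copying it.
ln -s "$demo/public/inputdata" "$demo/user/inputdata"

# The link resolves to the shared copy, so no data is duplicated.
ls -l "$demo/user/inputdata"
cat "$demo/user/inputdata/sample.nc"
```

Reading a file through the link returns the content of the single shared copy, so each additional user costs one link rather than one full copy.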

cd $HOME/cesm/cesm1_2_2/scripts

./create_newcase --help

#

# Simulation 1: short simulation

#

module load cesm/1.2.2

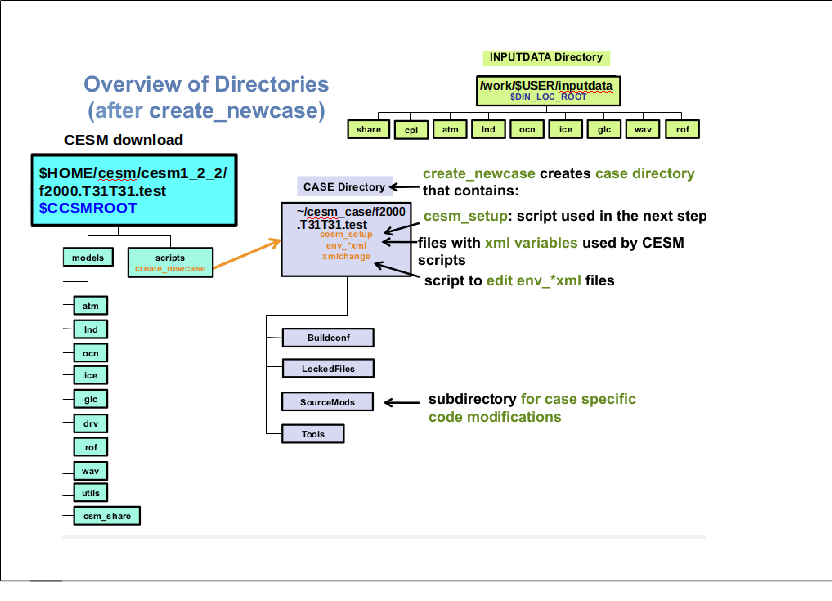

./create_newcase -case ~/cesm_case/f2000.T31T31.test -res T31_T31 -compset F_2000_CAM5 -mach abel

TIME_ATM[%phys]_LND[%phys]_ICE[%phys]_OCN[%phys]_ROF[%phys]_GLC[%phys]_WAV[%phys][_BGC%phys]

The compset longname lists its components in the order shown above, where:

TIME = Time period (e.g. 2000, 20TR, RCP8...)

ATM = [CAM4, CAM5, DATM, SATM, XATM]

LND = [CLM40, CLM45, DLND, SLND, XLND]

ICE = [CICE, DICE, SICE, XICE]

OCN = [POP2, DOCN, SOCN, XOCN, AQUAP, MPAS]

ROF = [RTM, DROF, SROF, XROF]

GLC = [CISM1, SGLC, XGLC]

WAV = [WW3, DWAV, SWAV, XWAV]

BGC = optional BGC scenario

The OPTIONAL %phys attributes specify submodes of the given system
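To make the notation concrete, here is a small sketch that splits a longname into its components and optional %phys attributes. The helper split_compset is hypothetical, and the longname passed to it is only an illustrative string written in this notation; check the longname actually reported for your compset.

```shell
# Hypothetical helper: print each component of a compset longname.
split_compset() {
    old_ifs=$IFS
    IFS=_
    set -- $1            # split the longname on underscores
    IFS=$old_ifs
    for label in TIME ATM LND ICE OCN ROF GLC WAV; do
        [ $# -gt 0 ] || break
        model=${1%%%*}   # text before the first %
        case $1 in
            *%*) phys=${1#*%} ;;   # text after the first %
            *)   phys=none ;;
        esac
        printf '%s = %s (phys: %s)\n' "$label" "$model" "$phys"
        shift
    done
}

# Illustrative longname only, not taken from the course material.
split_compset "2000_CAM5_CLM40%SP_CICE%PRES_DOCN%DOM_RTM_SGLC_SWAV"
```

The sketch ignores the optional trailing BGC field and assumes a POSIX shell such as sh or bash.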

cd ~/cesm_case/f2000.T31T31.test

Check the content of the directory and browse the sub-directories:

./xmlchange RUN_TYPE=hybrid

./xmlchange RUN_REFCASE=f2000.T31T31.control

./xmlchange RUN_REFDATE=0009-01-01

We use xmlchange, a small script that updates variables (such as RUN_TYPE and RUN_REFCASE) defined in the xml files. cesm_setup uses all the xml files in your test case directory to generate your configuration setup (Fortran namelists, etc.):
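Conceptually, changing a variable means rewriting the value attribute of one entry in an xml file. The sketch below imitates that on a toy file with sed. The entry layout and the set_entry helper are assumptions made for illustration, and this is not how xmlchange itself is implemented; always use xmlchange on real case files.

```shell
# Toy xml fragment; the entry layout is assumed for illustration.
demo_xml=$(mktemp)
cat > "$demo_xml" << 'EOF'
<entry id="STOP_OPTION" value="ndays" />
<entry id="STOP_N" value="5" />
EOF

# Hypothetical helper: set_entry FILE ID VALUE
set_entry() {
    sed "s|<entry id=\"$2\" value=\"[^\"]*\"|<entry id=\"$2\" value=\"$3\"|" "$1" > "$1.tmp" \
        && mv "$1.tmp" "$1"
}

set_entry "$demo_xml" STOP_N 1
set_entry "$demo_xml" STOP_OPTION nmonths
cat "$demo_xml"
```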

ls *.xml

To change the duration of our test simulation and set it to 1 month:

./xmlchange -file env_run.xml -id STOP_N -val 1

./xmlchange -file env_run.xml -id STOP_OPTION -val nmonths

./cesm_setup

./f2000.T31T31.test.build

cat >> user_nl_cam << EOF

nhtfrq = -24

EOF

cat is a Unix shell command that displays the content of files, or combines and creates files. Using >> followed by a filename (here user_nl_cam) appends to that file; if the file does not exist, it is created automatically. Using << followed by a string (here EOF) means that the content to append is not read from a file but from the lines that follow, up to the next line containing only EOF.
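The following sketch shows the same append pattern on a scratch file, and also how the shell treats variables inside the appended text. The variable who is a hypothetical stand-in for $USER, and the file is a temporary one, not a real namelist.

```shell
demo_nl=$(mktemp)
who=student   # hypothetical stand-in for $USER

# Unquoted delimiter: the shell expands $who before cat sees the text.
cat >> "$demo_nl" << EOF
path = '/work/users/$who/inputdata'
EOF

# Quoted delimiter: the text is appended literally, without expansion.
cat >> "$demo_nl" << 'EOF'
path = '/work/users/$who/inputdata'
EOF

cat "$demo_nl"
```

With an unquoted EOF, as used in this tutorial, variables such as $USER are replaced by their values when the lines are written to the file.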

cat >> user_nl_cice << EOF

grid_file = '/work/users/$USER/inputdata/share/domains/domain.ocn.48x96_gx3v7_100114.nc'

kmt_file = '/work/users/$USER/inputdata/share/domains/domain.ocn.48x96_gx3v7_100114.nc'

EOF

scp login3.norstore.uio.no:/projects/NS1000K/GEF4530/outputs/runs/f2000.T31T31.control/run/f2000.T31T31.control.*.0009-01-01-00000.nc /work/users/$USER/f2000.T31T31.test/run/.

scp login3.norstore.uio.no:/projects/NS1000K/GEF4530/outputs/runs/f2000.T31T31.control/run/rpointer.* /work/users/$USER/f2000.T31T31.test/run/.

#SBATCH --job-name=f2000.T31T31.test

#SBATCH --time=08:59:00

#SBATCH --ntasks=32

#SBATCH --account=nn1000k

#SBATCH --mem-per-cpu=4G

#SBATCH --cpus-per-task=1

#SBATCH --output=slurm.out

./f2000.T31T31.test.submit

squeue -u $USER